AI lacks intelligence, but does it matter?

“Orbiting at a distance of roughly ninety-two million miles is an utterly insignificant little blue green planet whose ape-descended life forms are so amazingly primitive that they still think ~~digital watches are~~ AI Chatbots are a pretty neat idea.” ― Douglas Adams, The Hitchhiker’s Guide to the Galaxy, revised

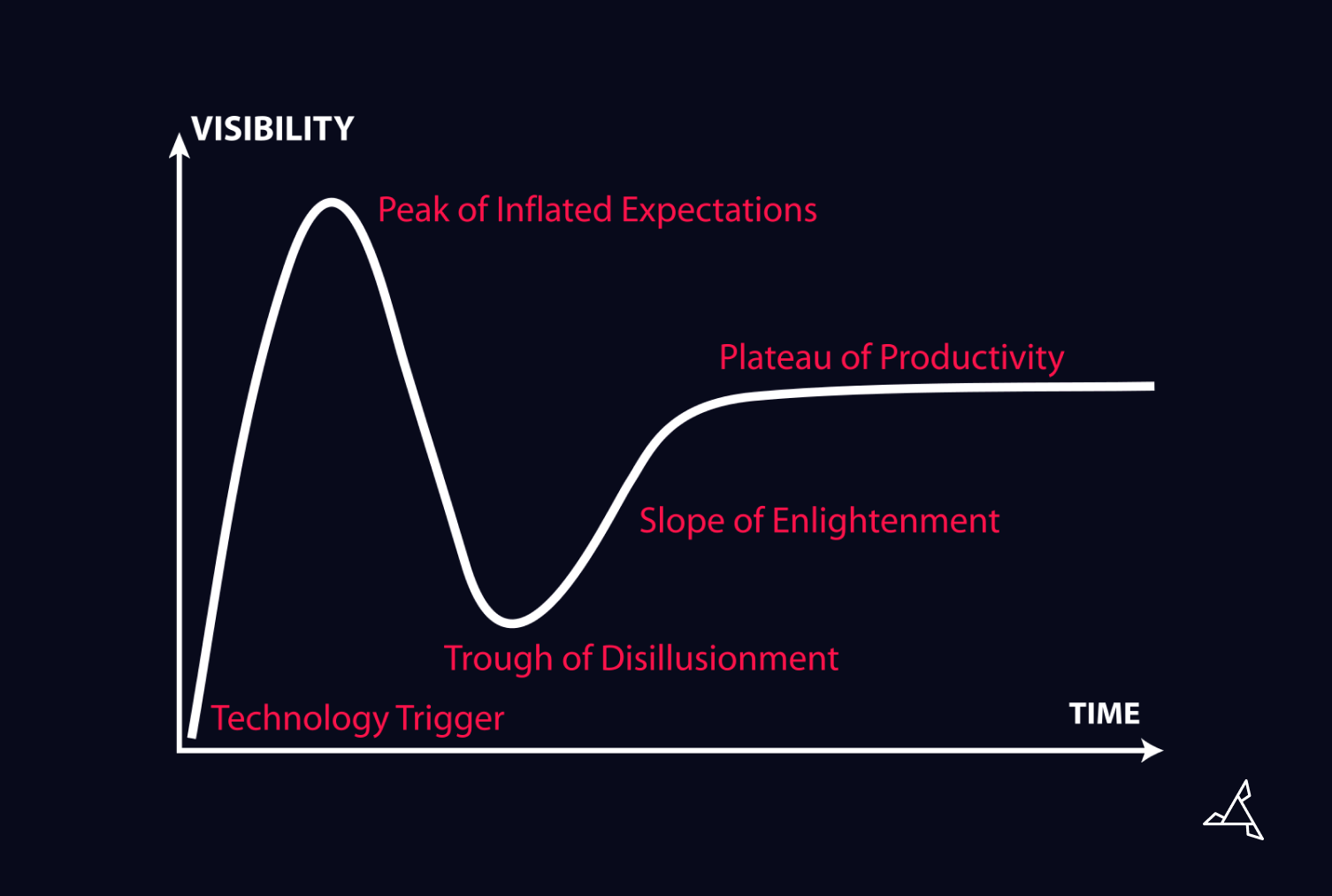

We’re gripped at the giddy peak of inflated expectations of the Gartner Hype Cycle when it comes to AI Chatbots. Except, perhaps, the peak hasn’t been reached yet. Countless articles and videos pounce on every twist in the AI tale. Former UK government scientific advisor Patrick Vallance and former Google AI pioneer Geoffrey Hinton both warn of the impact of AI on jobs and on our ability to tell truth from fiction.

At San we can see how AI will change the nature of work and society - another industrial revolution - once a more mature, less ‘hallucinatory’ AI Chatbot arrives at the ‘plateau of productivity’ in the near-ish future.

But for now, we also see a slightly strange pretence going on. Firstly, no one seems terribly bothered that the current generation of Chatbots ‘hallucinates’ - also known as making stuff up, or just lying.

In order to be able to use AI Chatbots you need to be able to tell whether the answer is true or not. ChatGPT told me I have two Master’s degrees. I know that’s not true (or I’ve become very forgetful).

Secondly, everyone is pretending that you cannot tell AI Chatbot writing from human writing. Which is odd, because we can. We made a simple tool, ‘Find the real writer: Bot or Not’, to detect whether a piece of writing is human or AI, and it works very well.

Yet there are plenty of slightly bogus stories like ‘AI makes plagiarism harder to detect, argue academics’, which only mentions deep in the article that the paper was revised by humans after it was written by AI, to cover up a known weakness in AI chatbots.

A key flaw in Artificial Intelligence is that it is currently not actually intelligent, as usually defined: “the ability to learn, understand, and make judgments or have opinions that are based on reason” (Cambridge University Dictionary).

Yes, AI Chatbots do learn as they munch their way through vast data sets. What they learn is which word is likely to come next for a given topic. They do not understand, make judgements, or have opinions.

How easy AI writing is to detect is measured chiefly by its perplexity.

“Perplexity is a commonly used evaluation metric in natural language processing (NLP) and AI writing tasks. It measures how well a language model can predict the next word in a sequence, given the previous words. More specifically, perplexity measures the level of uncertainty or confusion that a language model has when trying to predict the next word in a sequence. A lower perplexity score indicates that the model is better at predicting the next word, meaning that it has a lower level of uncertainty or confusion.” ― So said ChatGPT4.

San Digital’s Find the real writer: Bot or Not tool uses perplexity to assess that level of uncertainty or confusion. The lower the perplexity, the higher the likelihood that the text was written by an AI Chatbot.
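To make the idea concrete, here is a minimal sketch of how a perplexity score can be calculated and used. It is not the Bot or Not tool's actual code. It assumes some language model has already given a probability for each word (token) in the text, and it uses the standard definition of perplexity: the exponential of the average negative log-probability. The function names, the made-up probabilities and the threshold of 20.0 are all hypothetical, chosen purely for illustration.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Return exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("probabilities must be in the range (0, 1]")
    mean_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_neg_log)


def looks_like_bot(token_probs: Sequence[float], threshold: float = 20.0) -> bool:
    """Hypothetical rule: low perplexity means predictable, bot-like text.

    The threshold is an assumption for this sketch, not a calibrated value.
    """
    return perplexity(token_probs) < threshold


# Made-up probabilities, for illustration only.
predictable = [0.5, 0.4, 0.6, 0.5]      # the model finds each word likely
surprising = [0.01, 0.02, 0.005, 0.03]  # the model is repeatedly surprised

print(looks_like_bot(predictable))
print(looks_like_bot(surprising))
```

In practice the probabilities would come from a real language model, and any threshold would need tuning against known human and AI samples. A single cut-off like this will also misjudge humans who write very plainly, which is why a second measure is useful.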

We threw a lot of different styles of text at it:

We also used different AI chatbots to write in different styles. Yes, we went deep and broad.

It was exciting testing. We iterated many times as different texts threw up wrong answers from early versions of Bot or Not. For example, the classic progressive rock group ‘Yes’ did not employ AI chatbots in writing the lyrics of their seminal track ‘Close to the Edge’ in 1972, as it celebrates the work of Nobel Prize-winning author Hermann Hesse and his novel Siddhartha. Some weirdness is still uniquely human.

Perplexity spotted most of the AI writing by itself, with a secondary measure (part of Lee Crossley’s secret coding sauce) used to adjudicate between humans trying to write in a very simple style and AI writing.

The trend emerged that AI writing tended to be:

For any depth you need to understand the topic and make judgements to get good results out of AI Chatbots, because they just aren’t intelligent. They mimic a certain learning capability, which can look intelligent and is certainly very helpful and impressive, but isn’t the real deal.

Is the maths done in a spreadsheet or pocket calculator any less valid than the mental arithmetic done in someone’s head? There are a great many restricted writing styles where the sort of obvious language AI Chatbots are good at is highly prized. Things like:

None of these styles need flourish or personality. Estate Agents, for example, may be well-appointed individuals with a sunny aspect personality, but their house blurbs are already robotic.

Claiming that AI chatbots can write prosaic, mundane text is a lot less exciting than some of the wild assertions going around. Though, actually, it is a massive achievement and will impact our labour market.

Human language is fiendishly tricky. People play with words. Words mean different things in different contexts. Many words mean the same thing and the same word means different things. Words are invented and fall out of fashion all the time. AI can’t reflect that at the moment.

It’s important, in our hype cycle, to remember that we’ve been here before.

Once upon a time only humans could make pictures. Then machines were invented that could capture truly lifelike images. But we have learnt to live with the camera and artists have reinvented themselves as people who look at the world differently from any photograph.

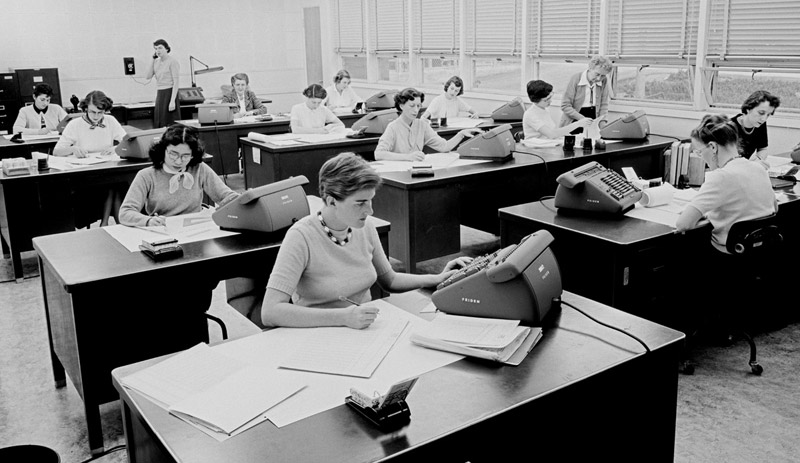

Once upon a time computers were human. They compiled log tables, created firing tables for artillery and even worked for NASA during the space race. Now our computers are digital and allow far greater access to maths problems than was ever possible before.

NASA ‘computers’ working on trajectories, vehicle payload, rocket lift capacity, and planetary orbital dynamics

When I was a teenager I wasn’t allowed to take pocket calculators into exams because it was felt to be cheating. Now academics are worried that using AI chatbots to write essays is cheating.

Like the pocket calculator (and its spreadsheet descendant), I feel pretty confident that using AI Chatbots will become an important life skill, and that you will be required to use them effectively in academia as in normal life. In the meantime, our Find the real writer: Bot or Not tool will help you distinguish between human and AI writing, if that’s important to you.
