Integrating with Events

The San Digital team has worked with numerous organisations in both the public and private sectors to transform their applications architecture into a flexible and business-focused model. Working with events at scale is key to maintaining individual teams’ agility.

In the process of reorganising an Enterprise Architecture, it’s common to split the problem space, the domain, into multiple interacting components, or Subdomains. Dividing a problem in this way is a common approach, but this terminology is taken specifically from Domain Driven Design (DDD). There is much more to DDD, such as Ubiquitous Language and Bounded Contexts, but these are outside the scope of this post.

If the Domain is the whole business and all of its processes, then the Subdomains tend to relate to how the business thinks of itself internally. A slight warning here: how the business or organisation thinks it is structured may not reflect reality, or what is optimal. Closing that gap is actually the core of transformation.

Transforming a business is, by all accounts, very hard, so we’re going to gloss over it here.

The realisation of a particular subdomain can take many forms, mostly defined by external factors. The popular view would suggest that each subdomain is built from a set of microservices; incidentally, no end of confusion stems from conflating subdomains and microservices. In reality, Subdomains can be realised in many ways. A Subdomain may be an existing piece of software that handles a significant part of the business (I’m looking at you, finance), or it may be a single application that contains multiple domains (cough, ERP, cough).

Once work begins on dividing domains, the next challenge is integration.

Three operations are key to enterprise integration: Commands, Queries and Events. All of these operations are transport agnostic.

A command requests that another subdomain perform some operation that changes state. The receiving subdomain may perform the operation and respond accordingly, or it may refuse for a number of reasons, so the requesting subdomain must be able to deal with all of the possible responses.

A query is a request for information that doesn’t change the target subdomain’s state. Information may or may not be returned, but there should be no side effects; to use HTTP terminology, it is a safe operation.

An event is something that happened in the past, so its name is always in the past tense. Anything that has already happened can’t be changed. This may seem a little fatalistic, but it’s an extremely useful property, as it makes events immutable. Because an immutable entity never changes, it can be copied without any synchronisation issues.
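To make the three operations concrete, here is a minimal Python sketch, assuming hypothetical customer-address messages; the class and field names are illustrative and not part of any particular framework. It shows the naming conventions (an imperative command, a question-like query, a past-tense event) and uses `frozen=True` so that an event instance cannot be modified once created.

```python
from dataclasses import dataclass, FrozenInstanceError
from datetime import datetime, timezone

# Hypothetical message types; names and fields are illustrative only.

@dataclass(frozen=True)
class ChangeCustomerAddress:
    """Command: imperative name, asks the owning subdomain to change state (it may refuse)."""
    customer_id: str
    new_address: str

@dataclass(frozen=True)
class GetCustomerAddress:
    """Query: asks for information and must have no side effects."""
    customer_id: str

@dataclass(frozen=True)
class CustomerAddressChanged:
    """Event: past-tense name, records something that has already happened."""
    customer_id: str
    new_address: str
    occurred_at: datetime

event = CustomerAddressChanged("c-1", "1 High Street", datetime.now(timezone.utc))
try:
    event.new_address = "2 Low Road"  # assignment on a frozen dataclass raises
except FrozenInstanceError:
    print("events cannot be changed once they have happened")
```

Freezing a dataclass only gives shallow immutability in Python, but it is enough to illustrate why the same event can be handed to any number of recipients without coordination.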

The path to integrating Subdomains normally starts with Commands and Queries. They tend to be synchronous, so they are simpler to reason about, and they fit easily with the slicing of an application: we put this chunk of stuff over there and then put a call between them. This is useful for a number of reasons, but it comes with limitations.

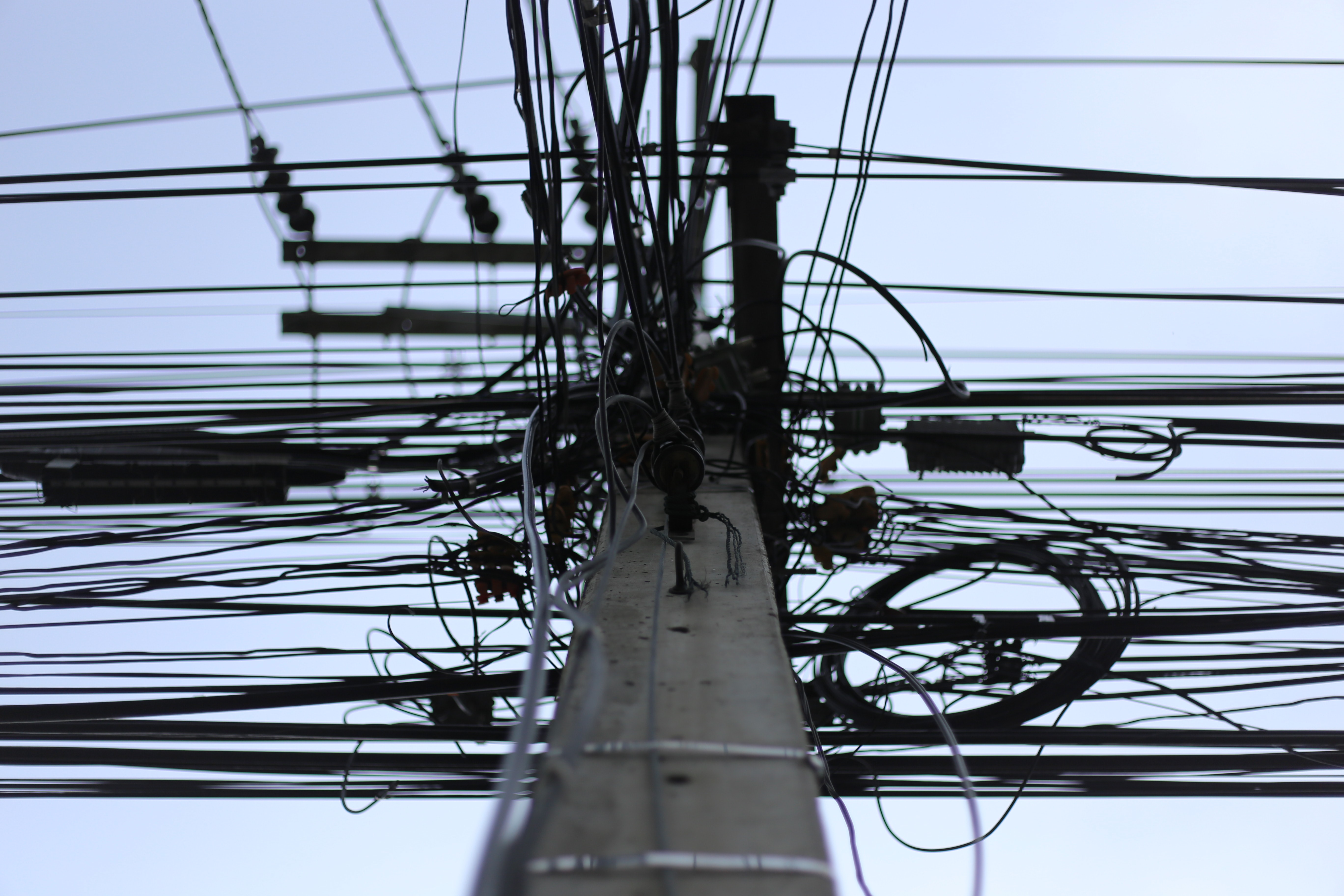

Given enough API calls, the problems of fan-out and chained dependency are eventually encountered. In a sufficiently complex system everything becomes dependent on everything else, so a call to one domain triggers a cascade of further calls, and latency and error rates grow geometrically with every call added to the chain.
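The compounding effect can be sketched with some simple arithmetic. Assuming, purely for illustration, that every call fails independently and that there are no retries or caches, a chain of calls succeeds only if every call succeeds, so availabilities multiply while latencies add; a parallel fan-out still multiplies availabilities but only waits for its slowest call.

```python
from math import prod
from typing import Sequence, Tuple

def chained(availability: float, latency_ms: float, depth: int) -> Tuple[float, float]:
    """Sequential chain of identical calls: all must succeed and latencies add up."""
    return availability ** depth, latency_ms * depth

def fan_out(availabilities: Sequence[float], latencies_ms: Sequence[float]) -> Tuple[float, float]:
    """Parallel fan-out: all calls must succeed, but we only wait for the slowest one."""
    return prod(availabilities), max(latencies_ms)

availability, latency = chained(0.999, 20.0, 10)
print(f"chain of 10 calls: {availability:.2%} available, {latency:.0f} ms")
```

With 99.9% availability and 20 ms per call, ten chained calls come out at roughly 99.0% availability and 200 ms, so the failure rate is about ten times that of a single call.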

To help with this problem, and to ease comprehension, it seems logical to create something that pulls all of these APIs together into something more usable and purpose-specific. This antipattern manifests itself in many different ways: API Gateways with extra functionality, Middleware, Experience APIs, ESBs.

The end result of all of these options is the same: functionality creeps into the central component and out of the subdomains, leaving them as anaemic wrappers around storage. At best, this results in a new monolith implemented on a proprietary technology, with external APIs serving as its storage; at worst, business logic is scattered to the four winds and change and progress become impossible.

What this architecture seeks to do is create a view of the whole domain at a point in time, but unfortunately it undermines the whole point of disaggregation. There is, however, another way to achieve the same goal.

Instead of centralising information to create a view of domain state, information can be distributed wherever it is required to achieve the same goal. As mentioned earlier, events, having already happened, are immutable and can be distributed freely. Being able to distribute state opens up options for integration patterns: there is less need for chained API calls and high fan-out, because the information can be copied to where it’s needed in advance. A degree of decoupling is also introduced, as subdomains emitting events are unaware of their recipients.

As we are operating at the level of events and not their transport, we can assume that events, once broadcast, can be seen by any subdomain that chooses to receive them, and that each recipient can do with that information as it pleases.
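A minimal in-memory sketch of broadcast, assuming a hypothetical `EventBus` class in place of a real broker, shows this property: the publishing subdomain names the event and hands it over, and any number of subdomains can choose to receive it and keep their own copy.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[dict], None]

class EventBus:
    """In-memory stand-in for a broker; real transports add persistence and delivery guarantees."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: dict) -> None:
        # The publisher never learns who, if anyone, receives the event.
        for handler in self._subscribers.get(event_type, []):
            handler(event)

# Two hypothetical subdomains, each keeping its own local copy of customer addresses.
billing_addresses: Dict[str, str] = {}
shipping_addresses: Dict[str, str] = {}

def billing_on_address_changed(event: dict) -> None:
    billing_addresses[event["customer_id"]] = event["address"]

def shipping_on_address_changed(event: dict) -> None:
    shipping_addresses[event["customer_id"]] = event["address"]

bus = EventBus()
bus.subscribe("CustomerAddressChanged", billing_on_address_changed)
bus.subscribe("CustomerAddressChanged", shipping_on_address_changed)
bus.publish("CustomerAddressChanged", {"customer_id": "c-1", "address": "1 High Street"})
```

Adding a third recipient is a new `subscribe` call; the publishing code does not change.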

If a cross-cutting view of the whole domain is required, e.g. a single view of the customer, it can be created as a read-only, or query-only, subdomain that aggregates a large number of events into a view of the customer. Query subdomains may initially be anaemic, but they will likely acquire functionality rapidly as significant information is prioritised and more advanced views are created. This is generally functionality that would otherwise clutter other subdomains that are more focused on the business’s own perspective.
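A query subdomain of this kind can be sketched as a projection that folds events from several owning subdomains into a read model. The event names and fields below are hypothetical; the point is that the projection never accepts commands, it only reacts to events and answers queries.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class CustomerView:
    customer_id: str
    name: str = ""
    address: str = ""
    order_ids: List[str] = field(default_factory=list)

class CustomerViewProjection:
    """Read-only subdomain: builds a single view of the customer from other subdomains' events."""

    HANDLED = {"CustomerRegistered", "CustomerAddressChanged", "OrderPlaced"}

    def __init__(self) -> None:
        self._views: Dict[str, CustomerView] = {}

    def apply(self, event_type: str, event: dict) -> None:
        if event_type not in self.HANDLED:
            return  # events this view doesn't care about are simply ignored
        customer_id = event["customer_id"]
        view = self._views.setdefault(customer_id, CustomerView(customer_id))
        if event_type == "CustomerRegistered":
            view.name = event["name"]
        elif event_type == "CustomerAddressChanged":
            view.address = event["address"]
        else:  # OrderPlaced
            view.order_ids.append(event["order_id"])

    def get(self, customer_id: str) -> Optional[CustomerView]:
        """Query: returns the current view without side effects."""
        return self._views.get(customer_id)

projection = CustomerViewProjection()
projection.apply("CustomerRegistered", {"customer_id": "c-1", "name": "Ada"})  # e.g. from a CRM subdomain
projection.apply("OrderPlaced", {"customer_id": "c-1", "order_id": "o-42"})  # e.g. from ordering
projection.apply("CustomerAddressChanged", {"customer_id": "c-1", "address": "1 High Street"})
```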

In an event-driven architecture, ownership of data is key. To update any information, a command must be sent to the subdomain that both controls the business logic and masters the data. When that data is changed, an event may be emitted. If that information is held anywhere else, it’s merely a cached copy that is synchronised via events.
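Data ownership can be sketched as follows, assuming a hypothetical credit subdomain that masters customers’ credit limits and an ordering subdomain that only caches them. The owner validates each command, may refuse it, and emits an event only when its own data has actually changed; the cache is written solely by the event handler. The `MAX_LIMIT` rule is an invented example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional

@dataclass(frozen=True)
class CreditLimitChanged:
    customer_id: str
    new_limit: int

class CreditSubdomain:
    """Hypothetical owner (master) of credit limits: the only place they can change."""

    MAX_LIMIT = 10_000  # illustrative business rule

    def __init__(self, publish: Callable[[CreditLimitChanged], None]) -> None:
        self._limits: Dict[str, int] = {}
        self._publish = publish

    def handle_change_credit_limit(self, customer_id: str, new_limit: int) -> Optional[str]:
        """Command handler: returns a rejection reason, or None if the command was accepted."""
        if not 0 <= new_limit <= self.MAX_LIMIT:
            return "limit out of range"  # the owner may refuse; no event is emitted
        self._limits[customer_id] = new_limit
        self._publish(CreditLimitChanged(customer_id, new_limit))
        return None

# The ordering subdomain holds only a cached copy, updated from events.
ordering_cache: Dict[str, int] = {}

def on_credit_limit_changed(event: CreditLimitChanged) -> None:
    ordering_cache[event.customer_id] = event.new_limit

credit = CreditSubdomain(publish=on_credit_limit_changed)
```

Here the publish callback is wired directly to one handler for brevity; in practice it would hand the event to whatever transport is in use.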

In reality, this architecture takes some of the principles of Command Query Responsibility Segregation (CQRS) and inflates them into an enterprise-scale integration pattern. CQRS tends to be associated with an event store, as CQRS/ES (event sourcing), but one isn’t necessary with this pattern.

With subdomains relying on events to synchronise state, consistency may be slightly delayed. The reality is that this already happens in most systems; events just make it more explicit. In most cases eventual consistency can be engineered around, and dealing with it tends to make a system more reliable as a whole, as anyone who has tried to build a distributed transaction will quickly find out.
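One common way to engineer around eventual consistency is to make each local copy tolerant of late, duplicated and out-of-order events. This sketch assumes, as a hypothetical convention, that the owning subdomain stamps each event with a per-customer version number that increases with every change; the cache then only accepts an event that is newer than what it already holds.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

@dataclass(frozen=True)
class CustomerAddressChanged:
    customer_id: str
    address: str
    version: int  # assumed: incremented by the owning subdomain on every change

class AddressCache:
    """Local, eventually consistent copy of data mastered elsewhere."""

    def __init__(self) -> None:
        self._entries: Dict[str, Tuple[int, str]] = {}  # customer_id -> (version, address)

    def apply(self, event: CustomerAddressChanged) -> bool:
        """Apply an event; return False if it was stale or a duplicate and so ignored."""
        current = self._entries.get(event.customer_id)
        if current is not None and current[0] >= event.version:
            return False
        self._entries[event.customer_id] = (event.version, event.address)
        return True

    def address(self, customer_id: str) -> Optional[str]:
        entry = self._entries.get(customer_id)
        return entry[1] if entry else None
```

Because applying the same event twice has no further effect, the handler is also idempotent, which matters with transports that deliver at least once.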

Commands, Queries and Events tend to be associated with particular transports, though they don’t have to be. It’s common to see commands and queries over HTTP, but it’s perfectly possible to use HTTP for events too, with mechanisms such as PubSub and WebHooks.

Events are commonly transported with one of two technologies: messaging and stream processing. Messaging in various forms has been around for a long time and is frequently confused with eventing, as one so commonly uses the other. To distribute messages, they are copied to individual persistent queues, one per recipient, and each recipient then pops items off its own queue.
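Queue-based distribution can be sketched in memory as below; the `QueueBroker` class is hypothetical, and a real broker would persist the queues. Publishing writes one copy of the message into every registered recipient’s queue, and each recipient consumes from its own queue independently.

```python
from collections import deque
from typing import Deque, Dict, Optional

class QueueBroker:
    """In-memory sketch of messaging: one queue per recipient."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[dict]] = {}

    def register(self, recipient: str) -> None:
        self._queues.setdefault(recipient, deque())

    def publish(self, message: dict) -> None:
        # Each recipient receives its own copy, so N recipients mean N writes.
        for queue in self._queues.values():
            queue.append(dict(message))

    def pop(self, recipient: str) -> Optional[dict]:
        """Remove and return the oldest message for this recipient, if any."""
        queue = self._queues[recipient]
        return queue.popleft() if queue else None
```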

Stream processing uses a single log, and each client moves a pointer along the log as it processes messages. Because messages are not copied into, or removed from, a queue per recipient, less write access to storage is needed, which can increase the performance of stream processing technologies.
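For contrast with per-recipient queues, a stream can be sketched as a single append-only list plus one offset per consumer; the `EventLog` name is hypothetical. Appending writes the event once however many consumers there are, and reading only moves that consumer’s pointer. For simplicity `poll` advances the offset as soon as events are returned, whereas real stream platforms typically let consumers commit offsets after processing.

```python
from typing import Dict, List

class EventLog:
    """Sketch of a stream: one append-only log shared by all consumers."""

    def __init__(self) -> None:
        self._entries: List[dict] = []
        self._offsets: Dict[str, int] = {}  # consumer name -> next position to read

    def append(self, event: dict) -> None:
        self._entries.append(event)  # written once, regardless of consumer count

    def poll(self, consumer: str, max_events: int = 10) -> List[dict]:
        start = self._offsets.get(consumer, 0)
        batch = self._entries[start:start + max_events]
        self._offsets[consumer] = start + len(batch)  # only the pointer moves
        return batch
```

Because the log is not consumed destructively, a new consumer starting at offset zero can replay the whole history.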

With most stream processing options it is possible to create operations that act directly on the stream itself, and there are multiple mechanisms for this. These operations are invisible to recipients, which are only aware of the events they receive. Stream operations may be simple transformations that convert one kind of event into an alternate format, or they may be more advanced and operate over multiple events, e.g. “this event has happened three times in the last ten minutes, so emit a new kind of event”.
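The windowed example can be sketched as a generator sitting on the stream. Assuming hypothetical `PaymentFailed` events arriving in time order as `(timestamp, event_type, customer_id)` tuples, it keeps a sliding ten-minute window per customer and emits a derived `RepeatedPaymentFailuresDetected` event when three failures fall inside it; downstream recipients only ever see the derived event.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta
from typing import Deque, Dict, Iterable, Iterator, Tuple

WINDOW = timedelta(minutes=10)
THRESHOLD = 3

def detect_repeated_failures(events: Iterable[Tuple[datetime, str, str]]) -> Iterator[dict]:
    """Emit a derived event when PaymentFailed occurs THRESHOLD times within WINDOW."""
    recent: Dict[str, Deque[datetime]] = defaultdict(deque)
    for ts, event_type, customer_id in events:
        if event_type != "PaymentFailed":
            continue  # other event types pass by untouched
        window = recent[customer_id]
        window.append(ts)
        while ts - window[0] > WINDOW:
            window.popleft()  # drop failures older than the window
        if len(window) >= THRESHOLD:
            yield {"type": "RepeatedPaymentFailuresDetected", "customer_id": customer_id, "at": ts}
            window.clear()  # start counting afresh after emitting
```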

When implementing stream operations, care must be taken that excessive business logic doesn’t migrate out of the domains. Used well, though, advanced stream processing can be very useful for business information and operations.

As with any interface, events will change over time, so some accommodation must be made for consumers requiring alternate versions. The simplest method is to enforce backwards compatibility in events; over time, though, this can become problematic. To accommodate breaking changes, it may be necessary to emit multiple versions of an event, or to use stream processing to duplicate events transparently. As with most problems of this sort, the real solution is collaboration between the teams and a commitment to maintain and evolve all of the subdomains.
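Transparent duplication with stream processing can be sketched as an upcasting step. Assuming a hypothetical breaking change in which `CustomerRegistered` version 2 splits a single `name` field into `given_name` and `family_name`, the operation below passes every original event through unchanged and, for version 1 events, also emits a version 2 copy, so consumers of either version keep working by filtering on the version they understand. The naive split on the first space is only for illustration.

```python
from typing import Dict, Iterable, Iterator

def upcast_customer_registered(event: Dict) -> Dict:
    """Convert a hypothetical v1 CustomerRegistered event into the v2 shape."""
    if event.get("version", 1) >= 2:
        return event
    given, _, family = event["name"].partition(" ")
    return {
        "type": event["type"],
        "version": 2,
        "customer_id": event["customer_id"],
        "given_name": given,
        "family_name": family,
    }

def duplicate_as_v2(stream: Iterable[Dict]) -> Iterator[Dict]:
    """Stream operation: pass each event through and, for v1 events, also emit a v2 copy."""
    for event in stream:
        yield event
        if event.get("type") == "CustomerRegistered" and event.get("version", 1) < 2:
            yield upcast_customer_registered(event)
```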

In some very secure environments, it may not be possible to secure the transport mechanism sufficiently to mitigate the risk of unauthorised access, for example through a man-in-the-middle attack. In this case a “thin event” can be sent that contains little more than a URL linking to a resource representing the event; that resource can then require the accessor to present credentials before returning any information. This mitigation is very much a last resort.
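A consumer of thin events might look like the sketch below. The `ThinEvent` shape and the bearer-token header are assumptions; any authentication scheme the resource supports would do. The event itself carries no business data, so intercepting it reveals little, and the fetch function is injectable so the handler can be exercised without a network.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class ThinEvent:
    event_type: str
    resource_url: str  # points at the full event; carries no business data itself

def fetch_with_credentials(url: str, token: str) -> dict:
    """Fetch the full event, presenting the caller's credentials (bearer token assumed)."""
    request = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)

def handle_thin_event(event: ThinEvent, token: str,
                      fetch: Callable[[str, str], dict] = fetch_with_credentials) -> dict:
    # Only a consumer with valid credentials can turn the reference into real data.
    return fetch(event.resource_url, token)
```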

Integrating at enterprise scale can create as many problems as it solves unless care is taken and all of the available toolkit is used.
